A brief overview of how we overcame some of the more interesting challenges on the MoMu project, featuring some insights on the look and feel, hardware, multi-screen rendering and video playback.

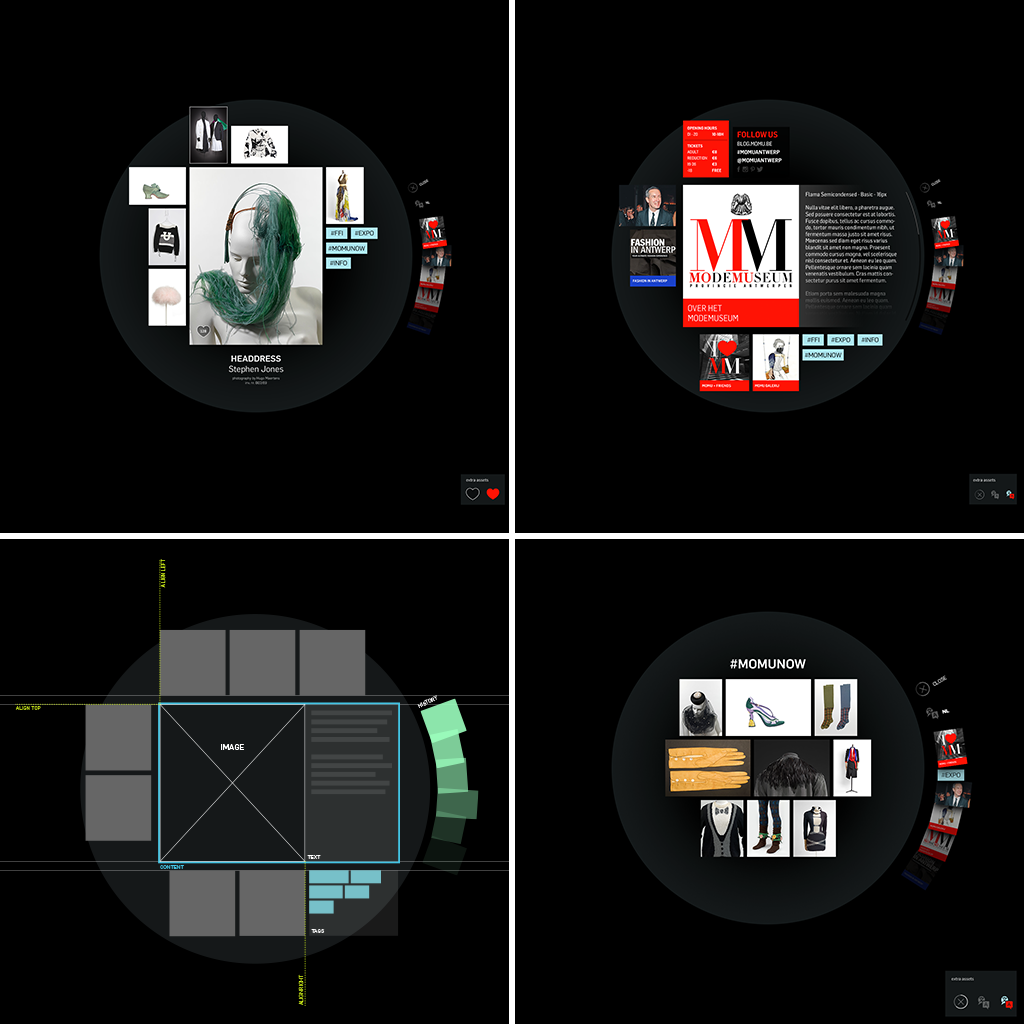

Look and feel

It was pretty clear that it wasn't possible to capture the look and feel in a series of Photoshop/Illustrator files. Therefore we worked with an iterative design process. The designer would provide us with design elements, which were integrated into a working prototype. The client provided regular feedback on the look and feel of the prototype. Working with an iterative process demands a great amount of trust from the client side. Clients are used to seeing the complete design before development starts. It was nice to work with a client like MoMu, who gave us this trust.

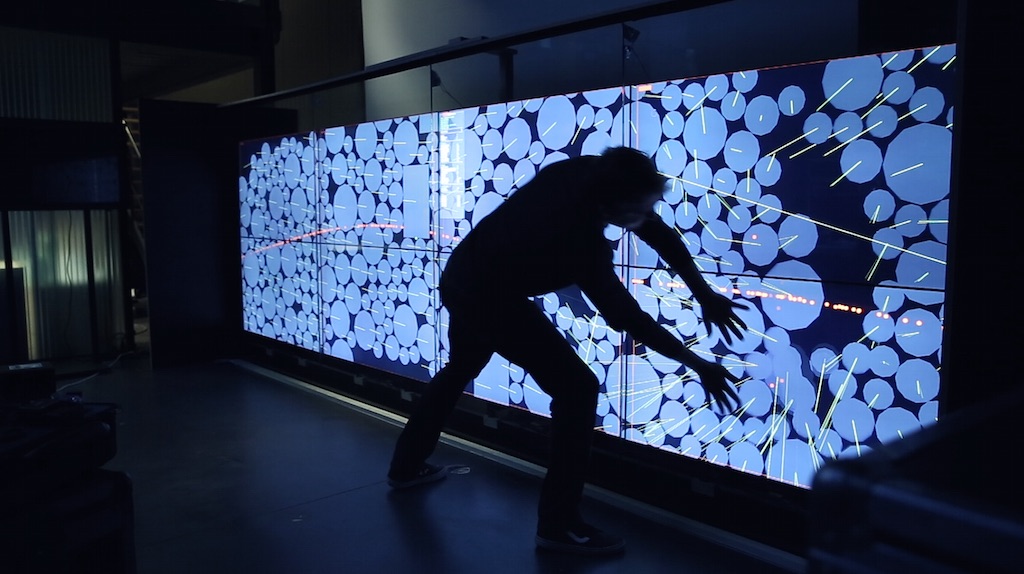

Motion

All collision, movement and repulsion is done with Box2D.

As seen below, every item has a velocity (yellow lines) and a different gravity point (red dots). The attraction or gravity is set by a Perlin noise flow.
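
A minimal sketch of how a drifting gravity point and the pull toward it could be computed. It is plain Python rather than Box2D, and the sine-based `flow_angle` is only a stand-in for a real Perlin noise lookup. All function names and constants are illustrative assumptions, not the project's actual code.

```python
import math


def flow_angle(x, y, t):
    """Smooth pseudo-flow direction in radians.

    Assumption: a stand-in for sampling a Perlin noise field at (x, y, t).
    """
    wobble = math.sin(0.013 * x + 0.7 * t) * math.cos(0.017 * y - 0.4 * t)
    return math.tau * (0.5 + 0.5 * wobble)


def drift_gravity_point(point, t, speed=2.0):
    """Move a gravity point one step of length `speed` along the flow field."""
    angle = flow_angle(point[0], point[1], t)
    return (point[0] + speed * math.cos(angle),
            point[1] + speed * math.sin(angle))


def attraction_force(item_pos, gravity_point, strength=5.0):
    """Force of constant magnitude pulling an item toward its gravity point."""
    dx = gravity_point[0] - item_pos[0]
    dy = gravity_point[1] - item_pos[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return (0.0, 0.0)  # already on the gravity point, no pull
    return (strength * dx / dist, strength * dy / dist)
```

In a physics engine the resulting vector would be applied to each body every simulation step, while collision and repulsion stay the engine's job.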

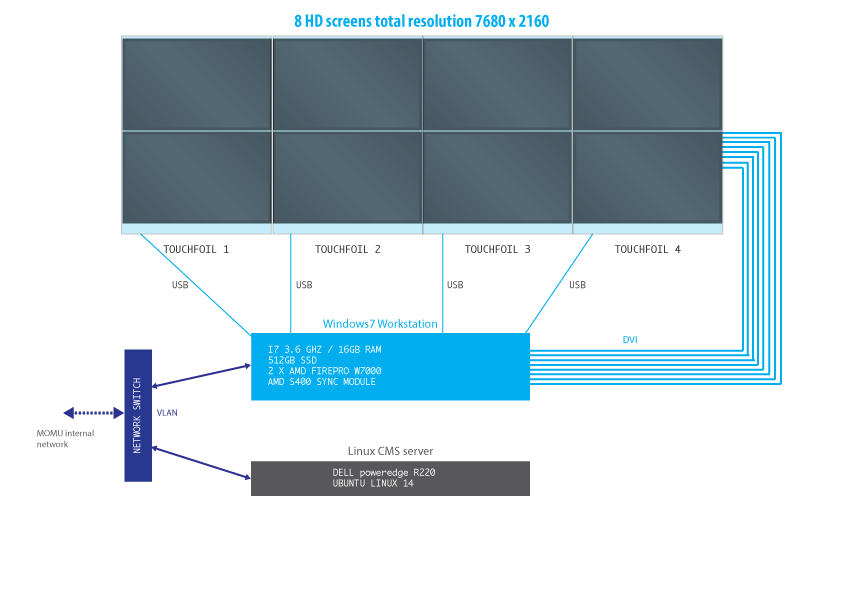

Hardware

One of the first challenges was the touch hardware. At this scale optical solutions are very common because they scale well.

But since the wall is placed outside, that wasn't an option for us.

From previous setups we knew that dust and sunlight would have a bad impact on the optical sensors, especially in the long run. So capacitive touch was the best option left. We used 4 capacitive foils, which were electrically isolated to reduce the interference between them. Each foil is connected to the workstation, where we merge all the data into a global coordinate system.
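
A minimal sketch of merging touches from several foils into one coordinate system. It assumes each foil reports normalised (0..1) coordinates and that the four foils sit side by side on a 7680x2160 pixel wall. Both the layout and the names (`Foil`, `FOILS`, `merge_touches`) are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Foil:
    """Rectangle a foil covers on the wall, in global pixels (assumed layout)."""
    x: float
    y: float
    width: float
    height: float

    def to_global(self, u, v):
        """Map a normalised touch (u, v in 0..1) to global wall pixels."""
        return (self.x + u * self.width, self.y + v * self.height)


# Assumption: four foils next to each other, each 1920 px wide and 2160 px high.
FOILS = [Foil(i * 1920.0, 0.0, 1920.0, 2160.0) for i in range(4)]


def merge_touches(per_foil_touches):
    """Combine {foil_index: [(u, v), ...]} into one list of global points."""
    merged = []
    for index, touches in per_foil_touches.items():
        foil = FOILS[index]
        merged.extend(foil.to_global(u, v) for u, v in touches)
    return merged
```

For example, a touch in the centre of the first foil ends up at (960, 1080) on the wall, regardless of which foil controller reported it.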

We have had good experiences with multi-screen projects running off one Eyefinity card. Unfortunately there are no cards available with 8 outputs.

For this setup we used two AMD FirePro W7000 cards and an S400 module to keep the refresh rate in sync on all the screens.

The current setup runs day and night, so we made sure all components were workstation quality. So far it has been running non-stop for 6 months and counting.

Hardware wiring

Software

At the top of our priority list was rendering 8x HD at 60 fps.

We tried:

- a network setup with OSC, which lagged with fast movements
- glShareLists, which didn't work out

Finally we discovered that we could simply use two fullscreen Cinder windows, one window per video card. Previously the framerate dropped dramatically when a window was placed on two screens which were connected to different GPUs. So we grouped the left side (2x2 physical screens), connected those screens to the same graphics card and grouped them as one desktop in the ATI configurator, and did the same for the other side.

One small side effect: we needed to upload our textures per window/OpenGL context.
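
One way to keep track of per-context uploads is a small cache keyed by context and asset. The sketch below is plain Python with a hypothetical `upload` callable standing in for the real GPU upload. The class name and the context labels are assumptions.

```python
class PerContextTextures:
    """Keeps one texture handle per (context, asset) pair."""

    def __init__(self, upload):
        # upload(context_id, asset_name) -> handle; hypothetical stand-in
        # for uploading pixels while that context is current.
        self._upload = upload
        self._handles = {}

    def get(self, context_id, asset_name):
        """Return the handle for this context, uploading on first use only."""
        key = (context_id, asset_name)
        if key not in self._handles:
            self._handles[key] = self._upload(context_id, asset_name)
        return self._handles[key]
```

Each window asks the cache with its own context id, so the same image is uploaded once per GPU and never shared across contexts by accident.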

Other improvements we made to maximise performance:

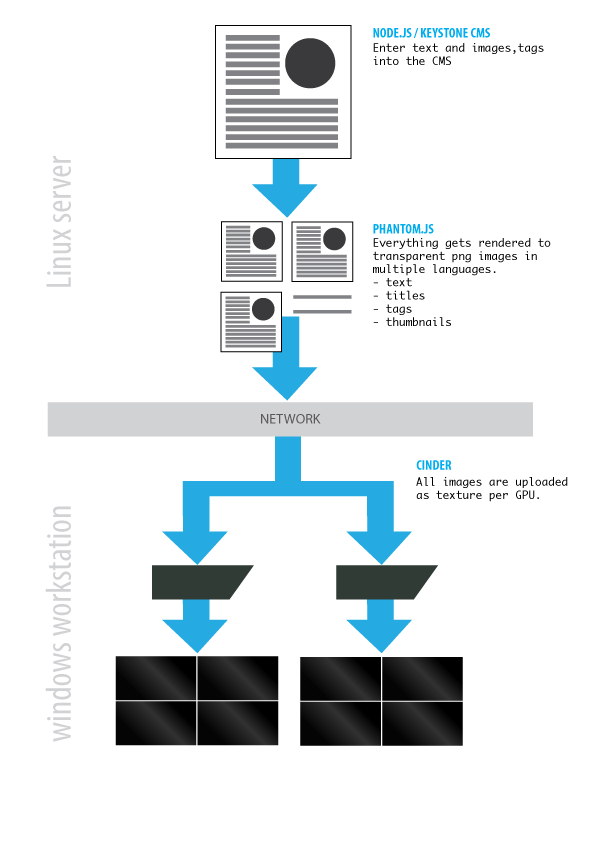

- prerendered all text and graphics with PhantomJS on the backend server
- used DXT for video playback
- used threading for all I/O operations
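
The threading point can be sketched as a background loader: a worker thread does the blocking reads, and the render loop only polls for finished results. This is a simplified Python illustration. `BackgroundLoader` and the `read_file` callable are assumed names, not part of the project.

```python
import queue
import threading


class BackgroundLoader:
    """Runs blocking reads on a worker thread so the render loop never waits."""

    def __init__(self, read_file):
        self._read_file = read_file        # callable(path) -> data (assumed)
        self._requests = queue.Queue()
        self._results = queue.Queue()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def request(self, path):
        """Queue a file for loading; returns immediately."""
        self._requests.put(path)

    def poll(self):
        """Return all finished (path, data) pairs without blocking."""
        done = []
        while True:
            try:
                done.append(self._results.get_nowait())
            except queue.Empty:
                return done

    def close(self):
        """Finish the queued requests, then stop the worker."""
        self._requests.put(None)
        self._worker.join()

    def _run(self):
        while True:
            path = self._requests.get()
            if path is None:               # sentinel pushed by close()
                break
            self._results.put((path, self._read_file(path)))
```

The render loop would call `poll()` once per frame and hand any finished data to the GPU upload step.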

Image prerendering and DXT video took some disk space and resulted in a very large number of files. But it gave us a really big performance boost, and disk space is relatively cheap. We are confident that we could easily scale this way of working up to even more screens without a big performance hit. The only disadvantage of prerendering is that we have to process the whole CMS again in multiple languages if we change something in the text layout. Currently this takes 30 minutes for 10,000 images.

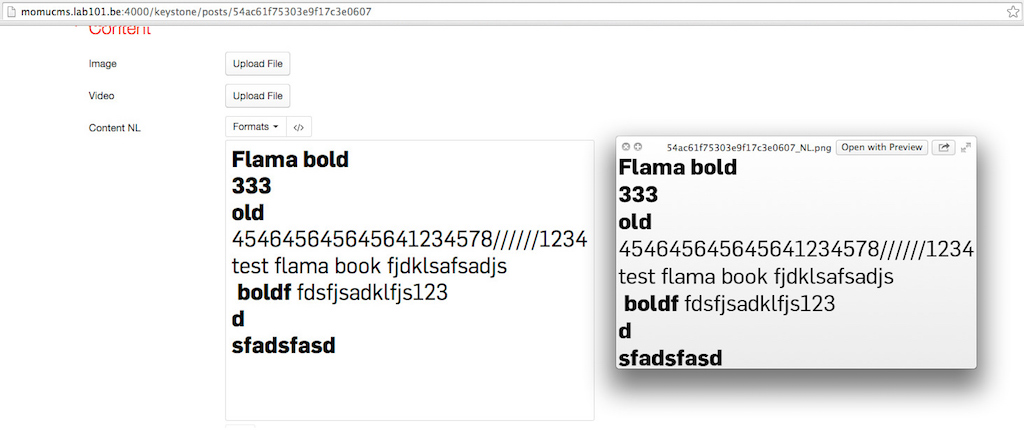

Text-render from the WYSIWYG editor to a PNG image.

We managed to get an almost perfect transition from the WYSIWYG editor to the image output.

On the left the CMS and on the right the rendered image for the Cinder front-end.

The path from text to the screen.

Video render

Video rendering is a common problem in a lot of OpenGL frameworks like Cinder and openFrameworks. We tried many solutions, but a lot of them were too slow or unstable with multiple instances and/or threads.

We consulted our genius friend Diederick Huijbers, who has worked on video encoding and decoding for a while. He wrote a platform-independent DXT encoder and decoder, which we converted to a Cinder-DXT block.

The benefits of using DXT:

- the codebase is rather small and readable compared to other solutions
- our implementation is fully open, so no crashes in closed DLLs
- we could do all heavy lifting in a thread and then upload the pixels per GPU

The disadvantages of using DXT:

- conversion takes a while
- it takes a lot of disk space
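
To get a feel for the disk space, here is a rough estimate. It assumes frames are stored as raw DXT blocks with no extra compression on top. DXT1 uses 8 bytes per 4x4 pixel block and DXT5 uses 16. The resolution, frame rate and duration in the usage note are example values, not figures from the project.

```python
DXT1_BLOCK_BYTES = 8    # bytes per 4x4 pixel block
DXT5_BLOCK_BYTES = 16   # bytes per 4x4 pixel block (adds interpolated alpha)


def dxt_frame_bytes(width, height, bytes_per_block):
    """Size of one DXT frame; dimensions are rounded up to whole 4x4 blocks."""
    blocks_x = (width + 3) // 4
    blocks_y = (height + 3) // 4
    return blocks_x * blocks_y * bytes_per_block


def dxt_video_bytes(width, height, fps, seconds, bytes_per_block):
    """Size of a clip stored as a plain sequence of DXT frames."""
    return dxt_frame_bytes(width, height, bytes_per_block) * fps * seconds
```

Under these assumptions a single 1920x1080 DXT1 frame is 1,036,800 bytes, so one minute at 25 fps comes to roughly 1.5 GB, and DXT5 doubles that.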